proprioception

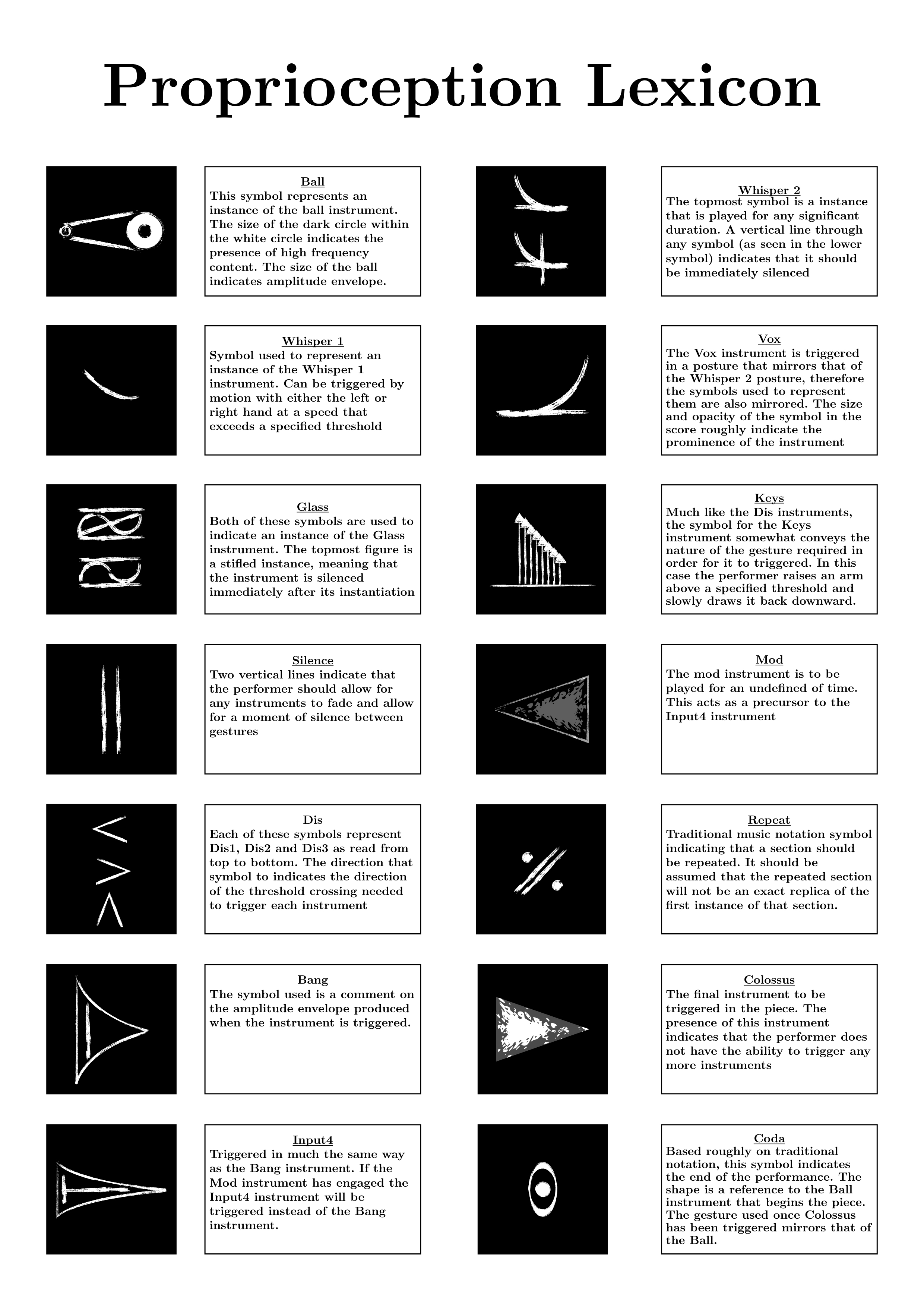

Proprioception is an electroacoustic composition that uses corporeal motion as a means of musical articulation. Subtle movements of the performer's body, tracked with an Xbox Kinect, both trigger and manipulate sound objects in real time.

Proprioception is one of a series of compositions that I have been working on as part of my PhD research into composing electroacoustic music with the body. Here, motion and gesture trigger and articulate sound objects in real time. My desire to compose electroacoustic pieces informed by corporeal input comes from my observation that audiences and performers of electronic music can sometimes seem disconnected from one another.

In this piece, I use the Xbox Kinect sensor together with Processing, a programming language for visual media, to map the skeletal structure of the performer. Points on this structure, including the hands, feet, torso and head, among others, are represented in Processing as points in 3D space, which allows me to determine posture, gesture, and the speed and direction of movement. This data is then sent to the audio programming environment Csound via the Open Sound Control (OSC) protocol.
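
To make the data flow concrete, the sketch below shows one way the speed and direction of a tracked joint could be derived from two successive 3D positions and packed into an OSC message. It is an illustration only: the piece itself uses Processing and Csound, and its actual code is not shown here. Python is used for brevity, and the OSC address `/hand/right`, the host, the port `7000`, and all function names are hypothetical choices made for this example.

```python
import math
import socket
import struct


def motion(prev, curr, dt):
    """Return (speed, direction) of a joint between two frames.

    prev, curr: (x, y, z) positions of the same joint in consecutive frames.
    dt: time between the frames in seconds.
    direction is a unit vector, or (0, 0, 0) if the joint did not move.
    """
    velocity = tuple((c - p) / dt for p, c in zip(prev, curr))
    speed = math.sqrt(sum(v * v for v in velocity))
    if speed == 0:
        return 0.0, (0.0, 0.0, 0.0)
    return speed, tuple(v / speed for v in velocity)


def osc_pad(data):
    """Null-terminate and pad bytes to a multiple of 4, as OSC strings require."""
    return data + b"\x00" * (4 - len(data) % 4)


def osc_message(address, *floats):
    """Encode an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(floats)
    return (
        osc_pad(address.encode("ascii"))
        + osc_pad(type_tags.encode("ascii"))
        + struct.pack(">%df" % len(floats), *floats)  # big-endian floats
    )


def send_joint(address, prev, curr, dt, host="127.0.0.1", port=7000):
    """Send speed and direction of one joint as a single OSC message over UDP.

    host and port are assumptions; they must match whatever the receiver uses.
    """
    speed, (dx, dy, dz) = motion(prev, curr, dt)
    packet = osc_message(address, speed, dx, dy, dz)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet
```

Calling `send_joint("/hand/right", previous_position, current_position, 1 / 30)` once per frame would stream one joint's motion at an assumed 30 frames per second. On the receiving side, Csound can listen for messages of this shape with its `OSCinit` and `OSClisten` opcodes, and the incoming values can then be mapped to whatever sound parameters the composition calls for.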

Performed as part of Noisefloor 2017, Stoke-on-Trent

Performed as part of Sound Thought 2017, Glasgow